Becoming The AI-Native Cyber VC (Building What We Invest In)

Something big is happening in software right now, and it's not the thing everyone's been talking about for two years.

We're past the "AI will change everything" phase. We're in the "AI is changing everything right now" phase, and most people haven't noticed yet because they're still treating AI like a research assistant instead of an operating system.

At TechOperators, we're a small investment team running an early-stage fund. We see 200+ companies a year. We don't have 20-person analyst teams. And we don't have the luxury of missing the 2-3 companies that will define the next decade of security.

We also invest in cybersecurity founders who are using AI to reimagine their categories from first principles — rewriting SOC operations, rethinking identity, fundamentally changing how security works. It would be hypocritical not to do the same.

So we stopped talking about AI and started building with it.

In a weekend, we went from manually reviewing every inbound opportunity to automatically screening out the 81% that don't fit, at roughly $0.20 per company. This is that story.

The Genesis: A Living Cyber Map

It started with something simple. Every VC firm has a market map — that PowerPoint slide with 50 logos showing "the landscape." We had one too. It was fine. It was also useless.

Static maps are backward-looking. They show you what already happened, not what's forming. And they're always out of date. You update them quarterly if you're disciplined, never if you're honest with yourself.

In early 2025, we did something weird. We turned our market map into a website: techoperators.com/landscape. We just wanted a better way to track the 200+ companies operating at the intersection of AI and cybersecurity.

Then something unexpected happened. Founders started reaching out asking to be added to the map. Not pitching us, not asking for meetings — just wanting to be on the landscape because other founders and VCs were using it as a reference.

In 2025, we added 213 companies to the landscape. 70 of them came from inbound founder submissions.

That's when we realized: we'd accidentally built a deal sourcing engine in the form of a market map. We were seeing companies 6-12 months before they formally fundraised, not because we were chasing them, but because they came to us.

The landscape became a spark. If we could turn a static PowerPoint into an intelligence system just by making it public and keeping it current, what else could we systematically improve?

Too Much Noise: The 90%+ Problem

Let's talk about what "deal flow" actually means at most VC firms.

A company raises a seed round. A warm intro comes through. A founder's LinkedIn activity suggests they're going stealth. Somehow, a signal appears that there's a company worth evaluating.

At most firms, here's what happens next: An associate or partner sees the signal. They spend half a day googling — who are the founders? What did they do before? Who else is in this space? Is this real traction or just a good deck? They write a memo or bring it to Monday's partner meeting. Partners discuss for 20 minutes: Is this worth a first call? Maybe you reach out, maybe not. Maybe you're days late and they already have a lead.

At 200+ companies per year, that's hundreds of hours just deciding whether to have a first conversation. And 90%+ of those deals aren't a fit. Wrong stage, wrong team, wrong category, wrong timing.

In 2025, we ran this process like everyone else. Daily signals of new cybersecurity startups. Our system auto-populated them into our CRM and posted them to Slack. But partners looked at every single one.

209 companies reviewed in 2025. 14 flagged as "Very Interesting" after human review. That's a 6.7% pass rate.

Which means we spent partner time on 195 companies we really shouldn't have. The bottleneck wasn't sourcing — it was qualification at the point of entry. Most VCs solve this by hiring more associates. We're only a few people, so we needed a different answer.

We Started Building

We built an agentic system that intercepts every deal signal — early-stage funding alerts, stealth founder discoveries, manual submissions — and runs automated research and scoring before a human even looks at it.

The architecture is simple, but you need to get your hands dirty to make it work:

Every new company that hits our radar — whether it's an automated PitchBook feed, a stealth founder flagged by Harmonic.ai, or me typing "check out Acme Security" into Slack at midnight — triggers the same pipeline.

The Data Layer: Getting Everything Into One Place

The first problem was convergence. Deal signals were coming from everywhere:

PitchBook had the broadest coverage but thin data — startup name, funding amount, stage. Affinity enriched company records with team backgrounds and investor relationships. Harmonic.ai caught founders going stealth before they had a company name. Our own landscape database had competitive context on 200+ companies.

We tried building this around Affinity as the hub. Didn't work. Affinity's enrichment only fires when it sees email or calendar interactions with a contact, not when you cold-add a record. And Harmonic is a signal source for us, not a system of record — i.e. we're not logging into it every day the way we live in Slack.

So Slack became the convergence layer. Every signal, regardless of source, posts to #dealflow. The AI agent watches that channel and processes everything that comes through.

The Orchestration Layer: We Chose Microsoft (Yes, Really)

Look, I know. n8n is the darling of the self-hosted crowd. LangChain is what the AI engineers are using. Zapier is what the no-code folks would pick.

We chose Power Automate. Microsoft's workflow automation platform.

Why? Three boring, operator reasons:

We already had it. Microsoft 365 E5 comes with Power Automate. It was just… there.

It handles webhook reliability. Built-in retry logic, error handling, and run history that just works. When a Slack event fails at 2am, I can see exactly why in the morning and replay it. With a self-hosted solution, I'm the one getting paged.

Anyone on the team can maintain it. Visual flow designer means any partner can open it, understand what's happening, and modify it. No Python environment, no Docker containers, no "wait for Kevin to fix it."

Is it sexy? No. Does it work? Yes. Has it failed even once in more than 365 days of production on a different use case of ours? Also no.

Sometimes the right tool is the boring one that's already in your stack.

Now here's what some of you are already thinking: you're just passing a Slack message to an API and printing the response. Where's the innovation?

Peter Steinberger got the same pushback when he shipped OpenClaw. "This isn't magic, you're just rearranging the UX." His answer: yes, exactly. That's the whole thing. The novel part was integrating the agent into WhatsApp and making it behave like a human would — knowing when to respond, when to shut up, how to not reply to itself in an infinite loop.

Same deal here. Getting the loop right (only fire on real deal signals, skip your own threaded replies, ignore the duplicate events Slack fires when a link unfurls) is the difference between something that changes how you work as a firm, and something that embarrasses you in front of your partners at 10am on a Monday.

Filter 1: Only process messages from #dealflow
Filter 2: Skip threaded replies (don't process the AI's own responses)
Filter 3: Skip message edits (Slack fires duplicate events when links unfurl)

Without these, the AI responds to itself, which triggers another response — infinite loop. Ask me how I know.
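Our filters live in Power Automate conditions, but the same three checks are easy to sketch in Python. This is a minimal illustration, not our production flow: the channel ID is a hypothetical placeholder, and the field names (`channel`, `thread_ts`, `subtype`) are the ones Slack's message events use.

```python
from typing import Any, Mapping

DEALFLOW_CHANNEL_ID = "C0DEALFLOW"  # hypothetical channel ID for #dealflow


def should_process(event: Mapping[str, Any]) -> bool:
    """Return True only for new, top-level messages in the deal-flow channel."""
    # Filter 1: only messages posted in #dealflow.
    if event.get("type") != "message" or event.get("channel") != DEALFLOW_CHANNEL_ID:
        return False
    # Filter 2: skip threaded replies, which includes the agent's own responses.
    # A reply carries a thread_ts that differs from its own ts.
    thread_ts = event.get("thread_ts")
    if thread_ts is not None and thread_ts != event.get("ts"):
        return False
    # Filter 3: skip edits. Slack sends a message_changed event when a link unfurls.
    if event.get("subtype") == "message_changed":
        return False
    return True
```

Because the agent always answers in a thread, Filter 2 alone is what breaks the self-reply loop; the other two cut noise and duplicate runs.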

We also figured out we needed to send the entire Slack event payload to Claude, not just the text:

Originally, we were just passing triggerBody()?['event']['text']. Works fine for manual posts ("check out Acme Security"). Completely breaks for PitchBook and Harmonic messages because they use Slack Block Kit — all the structured data (company name, funding, founders) lives in the blocks array, not the text field.

Claude parses the Block Kit JSON natively. Problem solved.
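To make the difference concrete, here is a small Python sketch of passing the whole event instead of the text field. The example event is invented for illustration (real PitchBook and Harmonic payloads will look different), and `build_user_message` is a hypothetical helper name.

```python
import json
from typing import Any, Mapping


def build_user_message(event: Mapping[str, Any]) -> dict[str, str]:
    """Serialize the whole Slack event so Block Kit data reaches the model."""
    return {"role": "user", "content": json.dumps(event, ensure_ascii=False)}


# Illustrative event shaped like a Block Kit post: the useful data sits in "blocks",
# while the top-level "text" field carries almost nothing.
example_event = {
    "type": "message",
    "text": "New funding alert",
    "blocks": [
        {
            "type": "section",
            "text": {"type": "mrkdwn", "text": "*Acme Security* raised a seed round"},
        }
    ],
}

message = build_user_message(example_event)
```

With only `example_event["text"]`, the model would never see the company name; with the serialized event, it does.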

The Intelligence Layer: The Claude API Call

Here's what the actual API call looks like:

The cache_control: ephemeral on the system prompt is doing heavy lifting here. The system prompt is 8,000+ tokens (scoring rubric, output templates, workflow instructions). Without caching, we'd pay full price for those tokens on every API call. With caching, we get a 90% discount on the cached tokens for repeated calls within a 5-minute window.

max_uses: 4 on the web_search tool caps the number of searches per call, which in turn caps token costs. Uncapped searches can blow up to 100K+ input tokens per call. Four searches get 80% of the signal at 20% of the cost.
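For readers who want to see how those two settings fit into a request, here is a hedged Python sketch that only builds the headers and JSON body for Anthropic's Messages API, without sending anything. It is not a copy of our Power Automate HTTP action: the `max_tokens` value, the function name, and the model ID passed in by the caller are all assumptions, and the web search tool type string is the one Anthropic documented at the time of writing.

```python
import json
from typing import Any

API_URL = "https://api.anthropic.com/v1/messages"


def build_request(
    system_prompt: str, slack_event: dict[str, Any], model: str, api_key: str
) -> tuple[dict[str, str], dict[str, Any]]:
    """Build (headers, body) for a Messages API call with caching and capped search."""
    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }
    body = {
        "model": model,      # caller supplies the model ID; none is assumed here
        "max_tokens": 2048,  # assumption: enough room for a short structured verdict
        "system": [
            {
                "type": "text",
                "text": system_prompt,
                # Marks the long system prompt as cacheable across calls.
                "cache_control": {"type": "ephemeral"},
            }
        ],
        "tools": [
            # Server-side web search, capped at four searches per call.
            {"type": "web_search_20250305", "name": "web_search", "max_uses": 4}
        ],
        # The full Slack event is sent as JSON, not just its text field.
        "messages": [{"role": "user", "content": json.dumps(slack_event)}],
    }
    return headers, body
```

Posting `body` as JSON to `API_URL` with those headers is the equivalent of the single HTTP action in the flow.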

The "Holy Shit" Moment

I need to tell you about the first time this worked.

Super Bowl Sunday 🏈🎉. I'd been building the plumbing for a couple days — webhook routing, Slack parsing, all the boring infrastructure. The AI agent itself was still theoretical. I had written the prompt, but we hadn't hooked it up yet.

Late one night, I connected the final piece. A Harmonic alert came through: stealth founder, generic description, no company name yet. Just a LinkedIn profile.

I watched the Power Automate flow trigger. HTTP call to Claude. 15 seconds pass. 25 seconds. 35 seconds.

Then the Slack thread populated:

🔥 VERY INTERESTING — [Founder Name] (Stealth) — 8.7 / 10.0

The AI had pulled the founder's entire career history from LinkedIn. Found their previous company, acquired by CrowdStrike in 2018. Discovered they'd filed 3 patents on behavioral detection. Identified them as the original engineering lead for Falcon endpoint. Cross-referenced against our landscape — zero competitors in their specific approach. Delivered a structured verdict with specific reasoning and a recommended next step.

Total time: 37 seconds.

I just stared at it. This was work that would have taken me 20 minutes of LinkedIn stalking, Google searching, and pattern matching against companies we'd seen before. The AI did it in under a minute, and it caught details I would have missed.

That's when I knew this wasn't just automation. This was a different way of operating.

But it's not just the hits. The passes matter more, because that's where the time savings actually live.

An alert came through for another startup — "AI workflow automation notebooks for operations teams." The AI ran its research, found a 3-person team with no cybersecurity pedigree, a horizontal DevOps tool competing with Tines and Torq, and zero security-specific positioning.

⏭️ PASS — [Company Name] — 3.8 / 10.0

Three lines of reasoning. Done. That's a company I would have spent 10 minutes researching to reach the same conclusion.

I also tried to break it. I sent "blackcloak.com" to the Slack channel — BlackCloak is already in our portfolio. The AI recognized it immediately, flagged it as an existing investment, and passed in a few tokens. No wasted research, no confused analysis. It just knew.

What the AI Actually Does

When Claude receives a deal signal, it follows a strict workflow:

Classify. Is this a COMPANY (evaluate the startup), FOUNDER (evaluate the person), or SKIP (team discussion, not a deal)?

Research. Run 3-4 web searches: company overview and recent news, founder backgrounds, competitive landscape, and traction signals.

Synthesize. Pull together intelligence from the Slack event data, Affinity CRM enrichment, web research, and our landscape database for category context.

Score. Evaluate against TechOperators investment criteria. We're not publishing our full rubric — that's 17 years of pattern recognition encoded into software, and it's our competitive advantage. But the weights heavily favor team quality and founder-market fit. After four funds investing in cybersecurity since 2008, we've learned what predicts success.

Respond. Deliver structured analysis as a threaded Slack reply with findings, thesis fit assessment, and a recommended next action. For clear passes, the response is 3-4 lines. Partners don't need 200 words explaining why a mediocre company is mediocre.
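The steps above can be sketched as a few Python types. This is an illustration of the workflow's shape only: the class and function names are hypothetical, the reply layout is approximate, and nothing here encodes our scoring rubric.

```python
from dataclasses import dataclass
from enum import Enum


class SignalType(Enum):
    COMPANY = "COMPANY"  # evaluate the startup
    FOUNDER = "FOUNDER"  # evaluate the person
    SKIP = "SKIP"        # team discussion, not a deal


@dataclass
class TriageResult:
    signal_type: SignalType
    subject: str
    score: float          # 0.0 to 10.0
    verdict: str          # e.g. "VERY INTERESTING" or "PASS"
    reasoning: list[str]  # a few lines for clear passes
    next_action: str


def research_topics(signal_type: SignalType) -> list[str]:
    """Return the web-search topics for a signal; SKIP signals get none."""
    if signal_type is SignalType.SKIP:
        return []
    return [
        "company overview and recent news",
        "founder backgrounds",
        "competitive landscape",
        "traction signals",
    ]


def format_reply(result: TriageResult) -> str:
    """Render the threaded Slack reply: a header, the reasoning, a next step."""
    header = f"{result.verdict} - {result.subject} - {result.score:.1f} / 10.0"
    return "\n".join([header, *result.reasoning, f"Next: {result.next_action}"])
```

Keeping the topic list at four matches the search cap on the API call, so each topic gets at most one search.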

The Meta Layer: We Built This With Claude

Here's the surreal part: we used Claude to build the system that runs on Claude.

The Power Automate flows, the data transformations, the webhook routing logic — we described what we needed, Claude wrote the expressions, we tested them, and shipped. This is how most people build with AI now.

Example:

Me: "The company name is in blocks[0].text.text but only if the block type is 'section'. How do I extract that in Power Automate?"

Claude:

Describe the problem, get the code, test it, move on. That's how we built this in a weekend.
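The Power Automate expression from that exchange isn't reproduced here. As a stand-in, this is the same extraction logic written in Python, a sketch under the assumption stated in the question: the name lives in blocks[0].text.text, and only when the first block is a section. The function name is hypothetical.

```python
from typing import Any, Optional


def first_section_text(event: dict[str, Any]) -> Optional[str]:
    """Return blocks[0].text.text if the first block is a 'section', else None."""
    blocks = event.get("blocks") or []
    if not blocks:
        return None
    first = blocks[0]
    if first.get("type") != "section":
        return None
    # The text object may be missing on some section blocks, so guard for it.
    return (first.get("text") or {}).get("text")
```

The null-safe lookups play the same role as the `?` operator in a Power Automate expression: a missing field yields nothing instead of failing the run.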

The prompt engineering was iterative too. Early versions of the scoring prompt were too generous — everything scored 7-8. We added explicit calibration instructions. We also had to engineer directness: "If a company is a clear pass, say so in 3-4 lines and move on."

We also treat prompts like code. The full system prompt library lives in SharePoint, versioned and documented. When we refine the scoring rubric or add new edge cases, we update the document, and Power Automate pulls it into the API call. No redeploying flows, no hardcoded prompts buried in workflows.

The system improved with every iteration. Claude helped us debug Claude.

Cost: Less than a Zoom Call

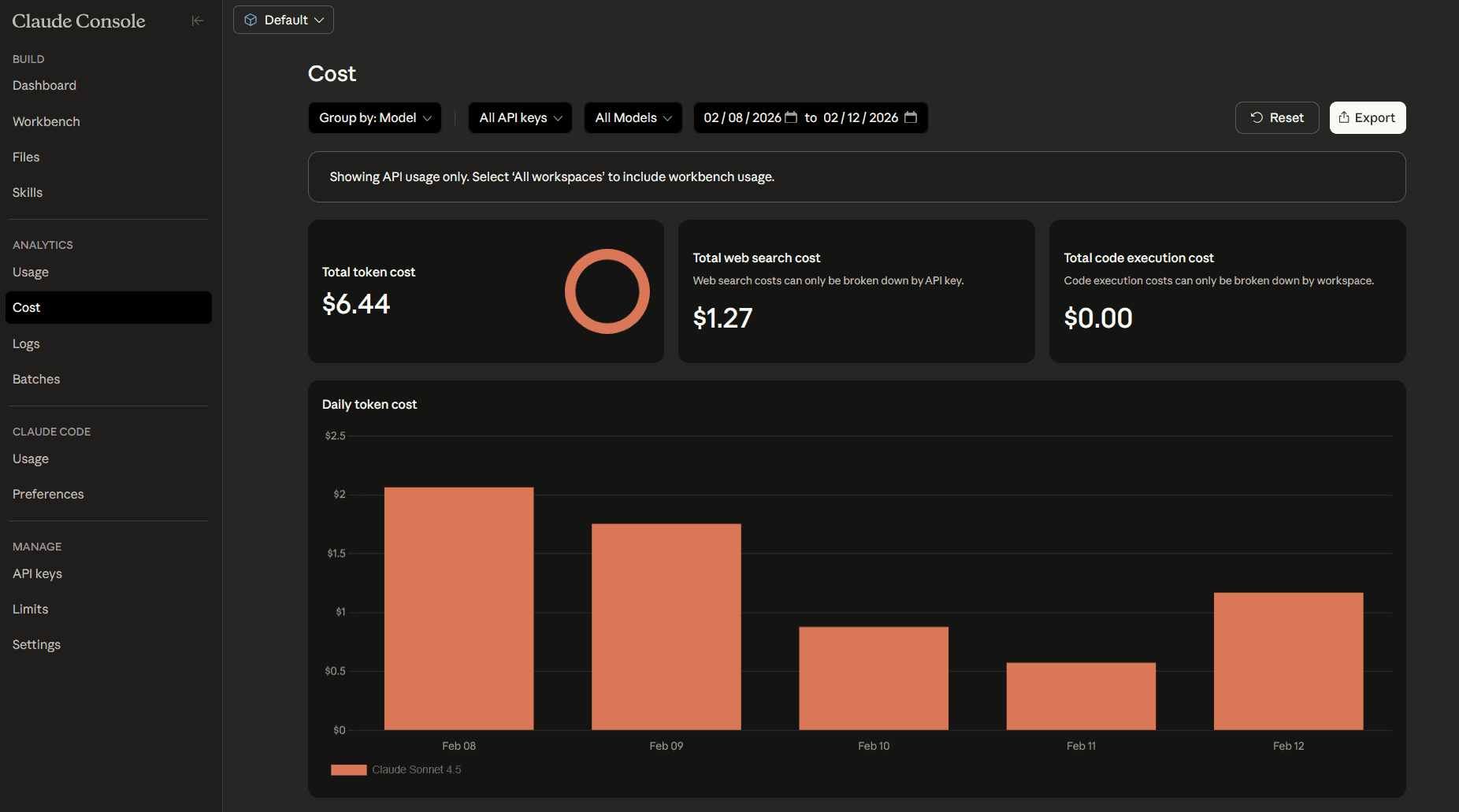

Final cost after optimization: ~$0.20 per deal. At 3-5 deals per day, the entire system runs for about $20/month.
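The monthly figure is simple arithmetic, sketched below in Python. The 22 working days per month is our assumption for illustration; counting every calendar day would push the range higher.

```python
COST_PER_DEAL_CENTS = 20     # ~$0.20 per deal, as quoted above
WORKING_DAYS_PER_MONTH = 22  # assumption: signals arrive on business days only


def monthly_cost_dollars(deals_per_day: int) -> float:
    """Monthly API spend in dollars for a given daily deal volume."""
    return deals_per_day * COST_PER_DEAL_CENTS * WORKING_DAYS_PER_MONTH / 100


low = monthly_cost_dollars(3)   # 13.2
high = monthly_cost_dollars(5)  # 22.0
```

Under that assumption, 3-5 deals per day works out to roughly $13 to $22 a month, which is where "about $20/month" comes from.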

That's less than a single Zoom meeting about whether to take a first call.

What Changed: Before and After AI Triage

2025 (manual triage): 209 companies auto-populated from PitchBook. Partners reviewed every single one. 14 flagged as "Very Interesting" — a 6.7% pass rate. 195 companies got partner time they shouldn't have.

Q1 2026 (AI triage): 47 companies processed through agentic research. 9 flagged as Very Interesting or Interesting — a 19% pass rate. Partners only review pre-qualified deals with a thesis already formed. Zero time wasted on the 81% that don't fit.

The signal-to-noise ratio nearly tripled: a 6.7% pass rate became 19%. Partner time shifted from triage to relationship building.
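For anyone checking the math, the pass rates come straight from the counts above. A short Python sketch follows; note that the Q1 2026 count includes both "Very Interesting" and "Interesting" companies, while the 2025 count is "Very Interesting" only.

```python
def pass_rate(flagged: int, reviewed: int) -> float:
    """Share of reviewed companies that were flagged for partner attention."""
    return flagged / reviewed


manual_2025 = pass_rate(14, 209)        # manual triage, full year 2025
ai_q1_2026 = pass_rate(9, 47)           # AI triage, Q1 2026
improvement = ai_q1_2026 / manual_2025  # how much denser the flagged set became

print(f"{manual_2025:.1%} -> {ai_q1_2026:.1%} ({improvement:.2f}x)")
# prints: 6.7% -> 19.1% (2.86x)
```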

What This Is Not

This is not a replacement for real relationships with superstar founders.

The companies that return the fund don't come from pipeline alerts. They come from 25 years of operator networks — former colleagues going out on their own, security leads we've known since they were individual contributors, warm intros from founders we backed in prior funds who are now angels themselves.

The best cybersecurity founders are known quantities before they're on anyone's radar. The CrowdStrike VP who's been talking to us for 18 months about what's broken in identity. The Palo Alto principal engineer who keeps showing up at our CISO dinners. The Wiz security lead who just asked if they can shadow our portfolio companies to understand go-to-market.

That's where the real alpha lives. The AI doesn't source those relationships — we've been building them for decades.

What the AI does is make sure we don't miss signal while we're focused on the superstars. In 2025, while we were spending time with the 14 companies that mattered, we were also manually triaging 195 that didn't. That's time we could have spent deepening founder relationships, sitting in on portfolio company hiring interviews, or doing one more reference call on someone we're excited about.

The AI automates the 93% that's noise so we can focus on the 7% that's signal — and on the handful of superstar founders who will define the next decade of cybersecurity.

This is not fully automated dealmaking. Partners still lead every investment decision. The AI compresses qualification, but the relationship building, the reference calls, the product deep-dives, the final investment judgment — that's human. We've seen the pitches: "AI agent closes deals while you sleep!" That's not this. The AI does the work nobody wants to do. It surfaces the 7% that deserve human attention. Then humans take over.

Why We're Publishing This

Most VCs would keep this proprietary. We're publishing it for three reasons.

Secrecy isn't defensible. If this architecture works — and it does — other firms are already building something similar. The tools are public: Claude API, Power Automate, Slack. The moat isn't the system. It's the 17 years of cybersecurity investing patterns encoded in our scoring rubric and the operator networks that feed our deal flow. You can copy the plumbing. You can't copy the judgment.

It shows how a small team competes. LPs ask how a 4-person fund competes with firms that have 15-20 people. This is one answer. We automate the bottlenecks and spend our time on the 7% of deals that actually matter. We don't have more analysts. We have better systems. If we're going to call ourselves operator-first, we should build like operators.

Founders should know how we work. If you're building a cybersecurity company and using AI to reimagine your category, you want investors who do the same. We're not finance people who read about AI in the Wall Street Journal. We build with it. We shipped this in a weekend and have processed dozens of deals through it.

What's Next

We're expanding in two directions.

Stealth founder discovery. The Harmonic integration is working. We're seeing 3-5 high-signal founders per week leaving security unicorns — CrowdStrike, Palo Alto, Wiz — and going stealth. Same research and scoring framework, applied 12-18 months before there's a company to evaluate. We're building relationships with operators before they incorporate.

Continuous refinement. Every investment we make and every company we pass on gets logged. Partners provide feedback directly in the Slack thread — "too early," "incredible team but wrong category," "not actually cybersecurity." Over time, the scoring model learns which signals predict our decisions versus which ones are noise.

We're also exploring a second agent: portfolio monitoring. Same research framework, pointed at existing investments to track product launches, competitive shifts, hiring signals, and traction in real-time. If one of our companies hires a VP Sales from Snyk or gets mentioned in a Gartner report, we want to know within 24 hours.

Something Big Already Happened

If you're a founder: AI is how investors evaluate you now. Not just us — every firm building these systems. Before you raise, make sure your digital footprint tells the right story. LinkedIn, website, company filings — an AI agent will synthesize all of it in 60 seconds and form a thesis before a partner reads your deck. Make it accurate. Don't fabricate. AI cross-references everything.

If you're an investor still triaging deals like it's 2023: the tools are public. The barrier was never technical. But you won't get there with engineers alone or operators alone. The firms that combine real operating experience with technical chops — and layer those on top of reputation, access, and market knowledge — will build systems worth trusting. Everyone else will hire more associates.

We built an AI system so we could spend more time being human.

More meetups with founders. More reference calls. More product design sessions. More of the work that actually builds successful companies.

The best use of artificial intelligence, it turns out, is buying back time for the real kind.